During this year’s process of figuring out what to get the kids for Christmas, my wife suggested a new gaming PC for our youngest. His last dedicated gaming setup was an Alienware laptop, and in addition to having reliability issues and sounding like a hair dryer when running graphics-intensive games, it was limited in processing power by its portable form factor. There’s only so much hardware you can stuff into a laptop.

My immediate answer was ‘Great, I’ll start spec’ing out an Alienware desktop’ but she had another idea. ‘Why don’t you build something together?’ she suggested.

I wasn’t too keen on the idea. There’s a lot that can go wrong – all it takes is one incompatible or faulty component to start a chain of returns and exchanges – and I had visions of a half-built PC sitting unused in a corner waiting to be brought to life: The perfect recipe for Christmas disappointment. And living where we do, there’s no Fry’s or MicroCenter nearby so everything would have to be done mail-order.

But my wife insisted that it would be a fun thing to do together, and reminded me I had built many PCs back when that was common practice. I relented and started researching which components to include in the build.

I wanted to create something that would run nice and quiet even under full load and opted to go with AMD’s Radeon R9 Fury X. Now before you tell me that I should have gotten the Nvidia GeForce GTX 980 Ti which generally benchmarks faster, keeping the noise ceiling low was the deciding factor here.

I’m a huge fan of solid state drives and got a pair of Samsung 500GB SATA SSDs and a 3TB Seagate hard drive for general storage. At $87, the Seagate was just too inexpensive to pass up.

My choice of DRAM was complete overkill – 32GB worth of 3000MHz DDR4. It was on sale, and nothing will trash performance faster than hitting the operating system page file. Even after accounting for OS overhead and running programs, 32GB can cache a ton of texture maps.

For the motherboard, I got an Asus MAXIMUS VIII compatible with Intel’s latest LGA 1151 CPUs, and a nice, high-efficiency Thermaltake 750W power supply.

The only real snag I ran into was with the CPU. I could not find an LGA 1151 (“Skylake”) Core i7 anywhere – not online, not brick-and-mortar. I settled for a Core i5…not bad, and easy enough to upgrade later.

Last but not least, I chose an NZXT H440 case which was a nice minimal design devoid of slots for external media devices. This box was going to live primarily on Steam and other downloaded content.

I also ordered an Asus MG278Q 27” high frame-rate display which is compatible with AMD’s FreeSync monitor synchronization technology, and a Razer mouse, keyboard, and gaming headset.

Everything arrived via Amazon Prime a few days before Christmas. Whew! I cracked the case on the GPU to make sure that it had the latest CoolerMaster pump; earlier versions were prone to a high-pitched whine. Whew! on that as well.

Christmas morning rolled around, and the youngest had a good time opening box after box in his epic pile of presents.

While we were all still in our pajamas, we cleared off the dining room table to use it as our bench and got to work, our oldest son joining the fun.

The first step was to get a general sense of the case layout and what was going to go where. We then attached the CPU, heatsink, and CPU cooler to the motherboard, populated all four DRAM slots with the 32 gigs of memory, and screwed the motherboard in place inside the case.

We then had to figure out the location of the GPU since it is limited by the length of the hoses attached to its radiator. We chose the uppermost PCI-E slot, re-located the case-mounted fan at the rear to the top, replaced it with the GPU radiator/fan unit, and seated the graphics card itself into the motherboard slot.

We then attached all of the drives and SATA connectors, followed by the power supply and its connections to the motherboard, GPU, fan controller, and drives.

Finally, we attached all of the miscellaneous connectors – front-mounted USB ports and audio, power/reset switches, and LED indicators.

It was time to see if anything worked. We attached the monitor and keyboard, turned on the power supply, and hit the power button.

The Asus motherboard has a pair of very handy old-school seven-segment LEDs mounted in the corner that provide status codes as the system goes through the POST (power-on self-test) process. It was hanging at “99”, and the monitor was black.

Not great, but the CPU was probably fine, and the status LED for the memory indicated normal, so that was good. We looked up the error code for 99 and it said “Super IO Initialization”. Hmmm. Well, somewhere someone was unhappy on the system bus.

In situations like this, you want to start eliminating variables to get down to the most minimal system possible. I suggested pulling the graphics card first. Next power cycle we went straight to the BIOS setup screen. A cursory inspection showed that everything looked good – CPU, memory, and storage.
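The strip-it-down approach is really just a loop: pull one optional part, power cycle, and repeat until the board makes it through POST. Here is a rough Python sketch of that idea. The function name, the part labels, and the `boots` callback are all made up for illustration; in real life `boots` is you pressing the power button and watching the status code.

```python
def first_part_that_unblocks_boot(optional_parts, boots):
    """Pull optional parts one at a time until the system boots.

    optional_parts: labels for parts the board can POST without
                    (hypothetical names such as "gpu" or "hdd").
    boots:          hypothetical callable standing in for a real power
                    cycle; it receives the list of parts still installed
                    and returns True if POST completes.

    Returns the part whose removal let the system boot, or None if the
    system still hangs with every optional part removed.
    """
    installed = list(optional_parts)
    for part in optional_parts:
        installed.remove(part)   # pull one more part
        if boots(installed):     # power cycle with what is left
            return part
    return None
```

A part that unblocks the boot is not necessarily faulty. It only tells you where to look next.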

On to installing the operating system! After a good chunk of time spent trying to create a bootable Windows 10 ISO USB thumb drive using Macs, my oldest son and I finally resurrected an old Windows 7 PC, and tried using the “Windows 10 USB/DVD Download Tool.” That utility turned out to be incapable of recognizing any of our USB drives, but we eventually had success with a free third-party utility. After that, the new machine fired right up into the Windows 10 setup.

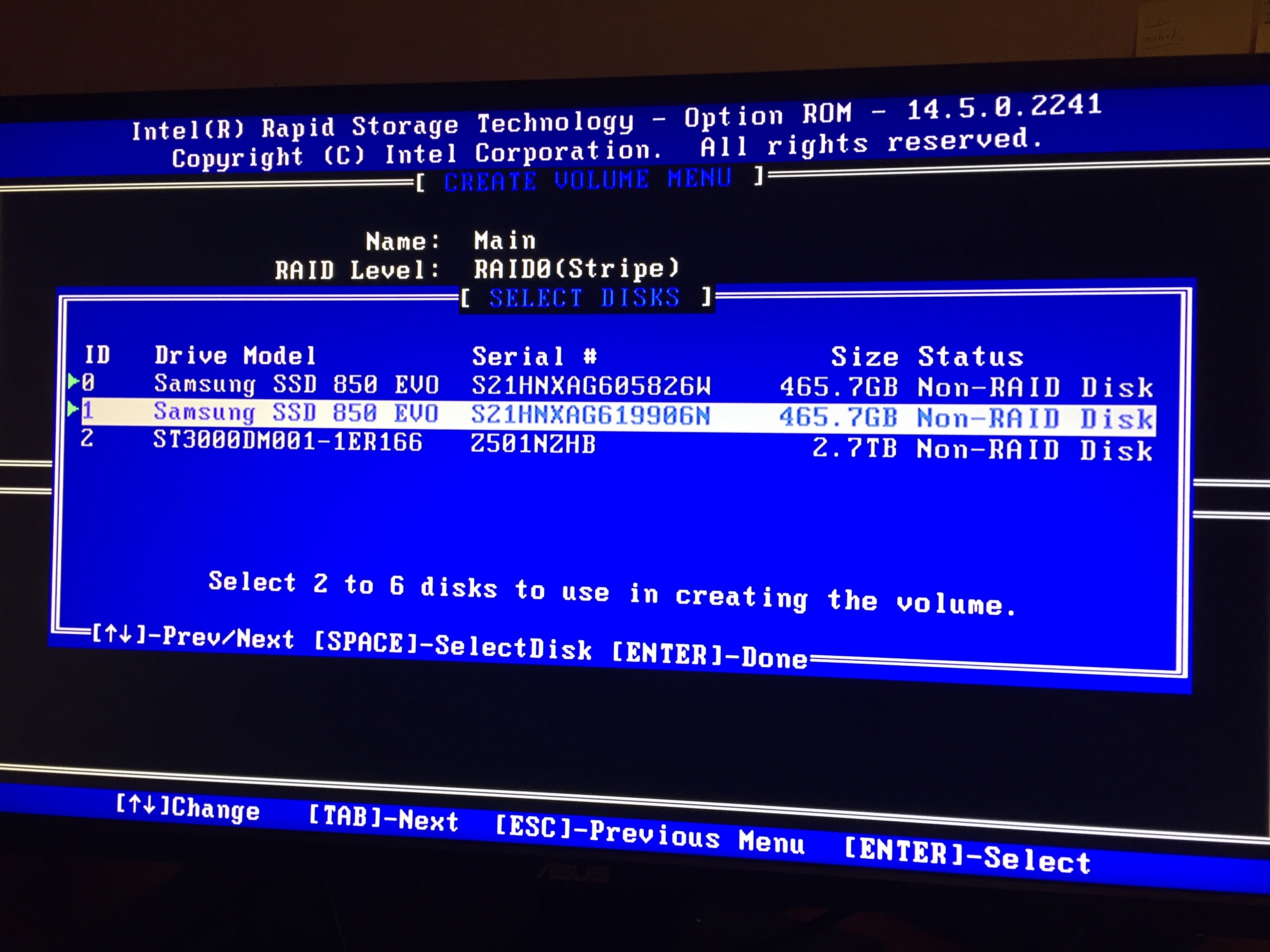

I remembered that I wanted to set up the two SSDs as a RAID 0 configuration, creating an approximately 1TB SSD with interleaved reads and writes for performance. This should be no problem since the motherboard and chipset support RAID. But I wasn’t being given a RAID setup option as part of the BIOS setup. Why? Another stopper.
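For anyone curious what “interleaved” means in practice, here is a minimal Python sketch of how RAID 0 striping spreads data across drives. It is an illustration only, not how Intel’s RAID firmware is actually implemented: the function names are made up, and the stripe size of 32 blocks is an arbitrary assumption.

```python
def raid0_capacity_gb(disk_sizes_gb):
    """Usable RAID 0 capacity: the smallest member times the number of members."""
    return min(disk_sizes_gb) * len(disk_sizes_gb)


def raid0_locate(logical_block, num_disks=2, stripe_blocks=32):
    """Map a logical block number to (disk index, block number on that disk).

    stripe_blocks is an assumed stripe size, chosen only for illustration.
    """
    # Which stripe the block falls in, and how far into that stripe it sits.
    stripe_index, offset = divmod(logical_block, stripe_blocks)
    # Consecutive stripes alternate across the member disks.
    disk = stripe_index % num_disks
    # Each disk holds every num_disks-th stripe, packed back to back.
    disk_block = (stripe_index // num_disks) * stripe_blocks + offset
    return disk, disk_block
```

Because consecutive stripes land on different drives, a large sequential read or write keeps both SSDs busy at once. The catch is that losing either drive loses the whole volume.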

I tried changing as many settings in the BIOS as I could think of…and still no RAID controller option. One thing that was starting to bother me was the fact that while both SSDs showed up in the BIOS, the Seagate only showed up at the start of the Windows installation process as one of the potential OS installation targets. So it was definitely there and healthy, but why was it missing from the BIOS status?

I went back to the motherboard layout diagram to check where the SATA connections for the three drives were made. The two SSDs were at the top of the block, and the Seagate was just below in the next empty socket. The diagram, however, showed that the SATA block was split – the top two sockets, Intel, the next two, Asus, the rest, Intel. Hmmm. I moved the Seagate’s connector from the Asus block to the rest of the Intel block lower down, powered up, and got the option for Intel’s RAID setup. OK, strange, but that did the trick. Unlike the slick, graphics-based interface for the BIOS settings, the RAID setup screen was plain old DOS character-mode. Wow!

The next day, OS installation proceeded smoothly. At the end of the install, we put the GPU back in place, fired up the rig, and successfully installed the Radeon drivers and control panel.

The only thing left was to see a game in action. A few downloads later, the machine was running an FPS at 2560 x 1440 completely effortlessly with quality cranked all the way up. Success!

Building a gaming PC was a ton of fun, and the only trick will be to figure out another project that we can work on together next year.

the aftermath in empty packaging

A couple of weeks ago, I travelled down south to Florida to run from Miami to Key West. Not all by myself – I was part of a long-distance relay race called the Ragnar. The race series originated in Utah with a run from Logan to Park city