This is the text of my 5/17/2014 commencement address for the University of Vermont’s graduate college:

Thank you very much for the introduction. It’s an honor and a privilege to be speaking to you today.

This is a very special day for me as well because I received my master’s degree from UVM at a similar May commencement 25 years ago. When I graduated, I did not imagine that I would be returning one day to address a future class of graduates.

I confess that getting ready to speak with you today has posed a real challenge for me. I’m a perfectionist. I wanted to find something to say that each one of you would find useful, or at least thought-provoking. I wasn’t really sure that I could give the same advice to someone studying historic preservation as to someone studying biochemistry or public health, so that goal seemed like a tough engineering problem to me. Also, my preferred presentation format includes lots of Q&A and interactive dialogue rather than simply talking to an audience. I did ponder the possibility of trying something a bit different, but I ultimately decided that it may be too soon to innovate with the commencement address format. And finally, as a UVM graduate, I felt that I had an extra measure of responsibility to this audience given my shared connection to this school and this community.

My path to UVM and to computer science was not a direct one.

My family and I came to the United States as political refugees. It was the late sixties, and my native Hungary was still behind the Iron Curtain. Along with the lack of many other basic freedoms, education was highly controlled and censored by the political system in place, and my parents didn’t want to raise their children in such an artificially limiting environment. We ended up gaining political asylum in the United States, and I had to get busy learning English. Back then, there were no classes in elementary schools for English language learners. But I remember learning lots of English watching The Three Stooges and Bugs Bunny cartoons on our small black-and-white TV, and trying to figure out what the characters were saying.

A few years later, in the mid-1970s, the first generation of video game consoles was coming to market, and the first real blockbuster video game was Pong. For those of you who haven’t ever heard of Pong, the game consisted of two electronically simulated paddles that could be moved up or down on the screen with a pair of controllers to try to keep a ball – really, just a crude square – bouncing between them. If you missed the ball, your opponent got a point. That was it. But it was a simple, fun, and intuitive game, and the market was eventually flooded with Pong game consoles hooked up to TVs. My brother and I received one as a gift at some point, and after the novelty of playing the game finally wore off, I took it apart to find out what made it work. How did the paddles and the ball get painted on the TV screen? How did the ball know whether or not it missed the paddle? I was determined to find out. Inside the device, there was a printed circuit board with a bunch of components soldered to it, including funny-looking rectangular parts with lots of legs. These, I discovered, were chips. And as I found out, the mysterious process that made the Pong machine work involved those chips, and digital electronics.

In the process of taking the Pong machine apart, I broke it, and when I put it back together it no longer worked. But I still wanted to get to the bottom of the mystery of what made the device work.

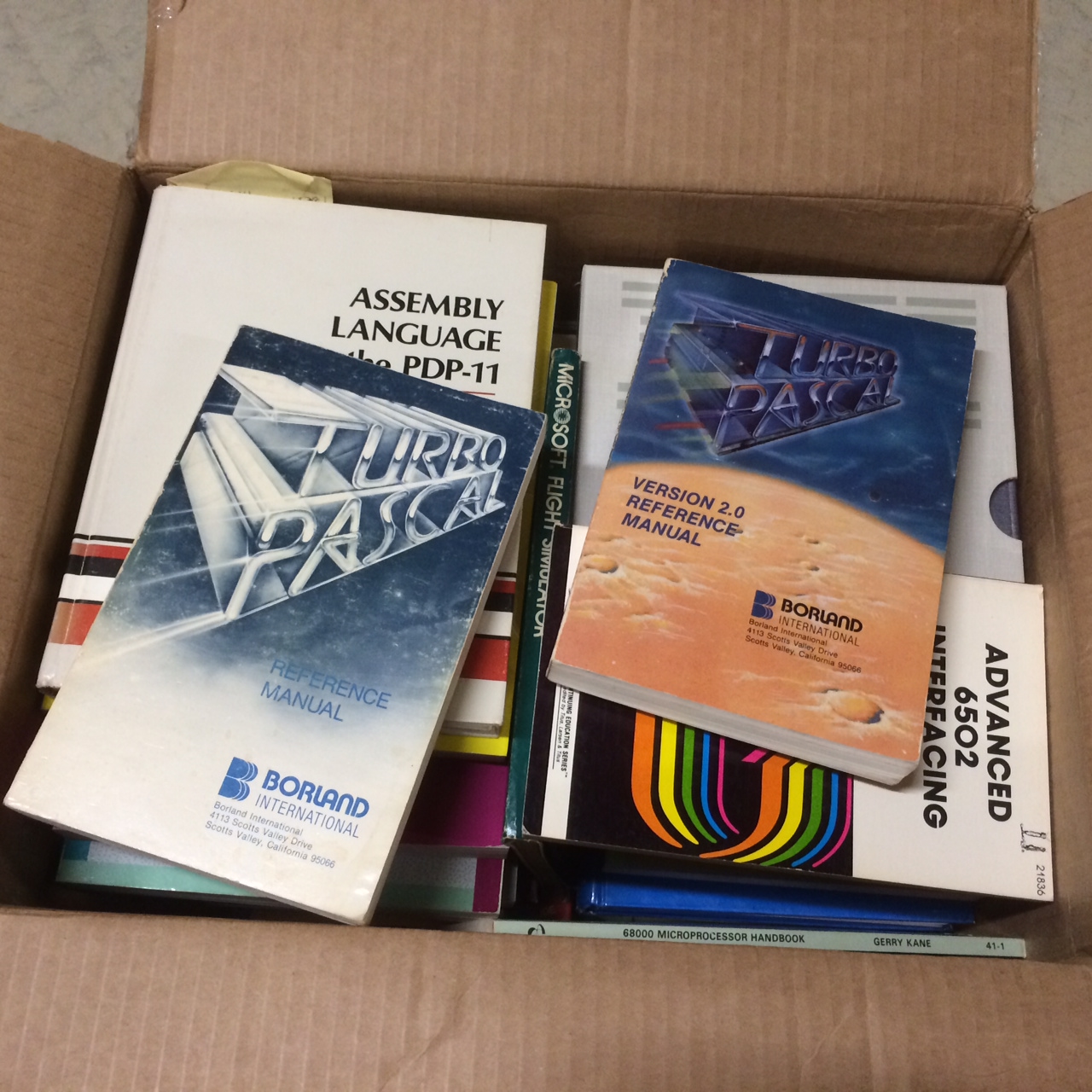

The late ’70s and early ’80s were a golden age for digital electronics hobbyists. Technology was still simple enough to be able to build your own projects from the ground up. I learned to solder, and how to design and make my own printed circuit boards to do things like count up or down, measure temperature, or create sound effects. With enough trips to the local Radio Shack, it seemed you could build anything.

Eventually, my projects got complicated enough that they required being controlled by a computer to be useful, so I taught myself how to program. The majority of my early software efforts were simply a way to bring my hardware projects to life.

I went to college and ended up being a physics major. Middlebury didn’t have a computer science major yet, and besides, computers were still just a side interest of mine.

That changed when I got my first real job after graduating from college. I applied for a programming job in the “help wanted” section of the newspaper. It might be hard to imagine now, but answering help wanted ads in the paper was how people actually found jobs back then. This was the mid-’80s, the PC revolution was just starting to take off, and people who knew how to write software were in high demand. In my case, the people looking for software help looked past the self-taught nature of much of my knowledge and hired me. I dove right in, and rewrote the tiny company’s bread-and-butter product over the course of a number of months. By then, I was completely hooked. Not only did I love what I was doing, but I was getting paid for it! It was also fantastic to be able to thumb through a magazine, point to an ad for the product I was responsible for, and say “I wrote that”.

But I also knew that much of what I was able to do was self-taught, and as valuable as teaching yourself is, it has its limits. I felt that there would eventually be a gap between what I wanted to do with technology, and the deeper knowledge that more advanced work would require. I loved the science and the craft of building software, and I wanted to be as good as I could possibly be. That’s how I finally ended up studying computer science at UVM.

When I graduated with my master’s, I could not imagine everything that would unfold in the computer technology area over the next 25 years. In 1989, the PC was still emerging as a mainstream product, the Internet was essentially a research project, and so many things that we take for granted today – everything from mobile phones and connected devices to seamless access to information and connectivity – were still in the future, yet to be invented, created, and developed.

My next three jobs after graduation were software programming jobs, and I wrote many thousands of lines of code and loved programming. But there came a point when I was asked to become a development lead at Microsoft. This role entailed management responsibility in addition to continuing to write software. After some consideration, I agreed, figuring that I could go back to pure software development if the management part of the job became too distracting.

You may know where this is going. About a year later, I was asked to take on even more responsibility as a development manager. This meant an end to me writing code. But it did not mean an end to me being an engineer, and everything I had learned in grad school continued to be incredibly useful, just applied in different ways.

In fact, this was the period of time when I co-founded Xbox. The Xbox effort started as an unofficial side project that was not approved by senior management. I was able to formulate an engineering and technology plan, but now as a manager, I was also able to assemble a small team of volunteers within my group to build the prototype software for Xbox. This working prototype convinced Bill Gates that the idea of creating a console platform using Windows technology was actually feasible.

Later, I led an effort in Microsoft Research developing and patenting new technologies in anticipation of a future boom in mobile computing and touch-based interaction for product categories that did not yet exist, such as today’s smartphones and tablets.

I also served in general management and architecture roles developing products, product concepts, and designs that were predecessors to modern tablets and e-reader devices.

When I graduated from UVM, I never imagined that I would have a product design portfolio, or patents, or management experience leading teams of hundreds of people. Much of my work since graduate school may not seem directly related to a computer science degree, but from my perspective, all of it was built on the foundation of engineering that I established here.

The basic principles in my field are still true today. Sound engineering practices don’t go out of style, and creative problem solving and innovation still look very much as they did when I graduated.

I have a whiteboard in my office, and I use it to map out designs, processes, architectures, and potential solutions in the same basic way I would have used it 25 years ago, even though today I may be solving organizational or business challenges rather than engineering ones.

Trust the foundation you have established here, and your ability to build your future upon it. Remain open to new possibilities to develop and grow as the future reveals itself to you. And stay curious about how things work, even if it means that you occasionally take something apart and can’t put it back together, as I did with Pong.

I want to wish you the best of luck, and congratulations on your achievement.

Thank you.